Creating an erasure-code rule from the command line

- First, create an erasure-code-profile (you can also use the default one). To list the existing erasure-code-profiles:

    # ceph osd erasure-code-profile ls
    default

- View the details of a specific erasure-code-profile:

    # ceph osd erasure-code-profile get default
    k=2
    m=1
    plugin=jerasure
    technique=reed_sol_van

- Define a custom erasure-code-profile. For example, create one that uses only HDDs:

    # ceph osd erasure-code-profile set hdd-3-2 k=3 m=2 crush-device-class=hdd

Available options:

- crush-root: the name of the CRUSH node to place data under [default: default].

- crush-failure-domain (failure domain): the CRUSH type to separate erasure-coded shards across [default: host].

- crush-device-class (device class): the device class to place data on [default: none, meaning all devices are used].

- k and m (and, for the lrc plugin, l): these determine the number of erasure code shards, affecting the resulting CRUSH rule.
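As a rough illustration of what k and m imply, the sketch below computes the shard count, the number of shard losses a pool can survive, and the raw-to-usable space ratio (k+m)/k. The function name ec_summary is hypothetical and not part of ceph; with crush-failure-domain=host, each shard is expected to land on a different host, so the shard count is also the minimum number of hosts.

```shell
#!/bin/sh
# Sketch only: ec_summary is a hypothetical helper, not a ceph command.
# It derives what a given k/m pair implies for an erasure-coded pool.
ec_summary() {
    k=$1
    m=$2
    # Each object is split into k data shards plus m coding shards.
    shards=$((k + m))
    # Raw space consumed per unit of user data is (k+m)/k.
    overhead=$(awk -v k="$k" -v m="$m" 'BEGIN { printf "%.2f", (k + m) / k }')
    echo "shards=$shards tolerated_failures=$m raw_overhead=${overhead}x"
}

ec_summary 3 2   # the hdd-3-2 profile
ec_summary 2 1   # the default profile (k=2, m=1)
```

For hdd-3-2 this suggests five shards per object and roughly 1.67x raw usage, compared with 3x for a three-way replicated pool.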

- Create a CRUSH rule from the erasure-code-profile:

    # ceph osd crush rule create-erasure erasure_hdd hdd-3-2
    created rule erasure_hdd at 5

- Inspect the CRUSH rule:

    # ceph osd crush rule dump erasure_hdd
    {
        "rule_id": 5,
        "rule_name": "erasure_hdd",
        "ruleset": 5,
        "type": 3,
        "min_size": 3,
        "max_size": 5,
        "steps": [
            {
                "op": "set_chooseleaf_tries",
                "num": 5
            },
            {
                "op": "set_choose_tries",
                "num": 100
            },
            {
                "op": "take",
                "item": -2,
                "item_name": "default~hdd"
            },
            {
                "op": "chooseleaf_indep",
                "num": 0,
                "type": "host"
            },
            {
                "op": "emit"
            }
        ]
    }

- Create a pool that uses the erasure-code rule:

    # ceph osd pool create test-bigdata 256 256 erasure hdd-3-2 erasure_hdd
    pool 'test-bigdata' created

Syntax: osd pool create <poolname> <int[0-]> {<int[0-]>} {replicated|erasure} [<erasure_code_profile>] {<rule>} {<int>}

Although the CRUSH rule was itself created from the erasure_code_profile, you still have to specify the erasure_code_profile explicitly when creating the erasure-coded pool.
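The profile, rule, and pool steps above can be strung together in a small script. The following is a minimal sketch, not a hardened tool: the run and create_ec_pool helpers and the DRY_RUN variable are hypothetical names introduced here, while the ceph commands themselves are the ones shown above. By default it only prints what it would run.

```shell
#!/bin/sh
# Sketch only: run, create_ec_pool and DRY_RUN are hypothetical names.
# DRY_RUN=1 (the default here) prints the commands instead of executing them.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_ec_pool() {
    profile=$1
    rule=$2
    pool=$3
    pg_num=$4
    # k, m and the device class are hard-coded to match the hdd-3-2 example.
    run ceph osd erasure-code-profile set "$profile" k=3 m=2 crush-device-class=hdd
    run ceph osd crush rule create-erasure "$rule" "$profile"
    # The profile is passed again here, even though the rule was built from it.
    run ceph osd pool create "$pool" "$pg_num" "$pg_num" erasure "$profile" "$rule"
}

create_ec_pool hdd-3-2 erasure_hdd test-bigdata 256
```

Setting DRY_RUN=0 would execute the commands for real, so it is worth reviewing the printed output first.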

Reference: http://docs.ceph.com/docs/master/rados/operations/pools/

- Tuning:

    # ceph osd pool set test-bigdata fast_read 1
    set pool 24 fast_read to 1

At present, fast_read only takes effect on erasure-coded pools.

- To create RBD images in this pool, you also need:


    # ceph osd pool set test-bigdata allow_ec_overwrites true
    set pool 24 allow_ec_overwrites to true

- Create a replicated pool to serve as the cache tier:

    # ceph osd pool create test-bigdata-cache-tier 128
    pool 'test-bigdata-cache-tier' created

    # ceph osd tier add test-bigdata test-bigdata-cache-tier
    pool 'test-bigdata-cache-tier' is now (or already was) a tier of 'test-bigdata'

    # ceph osd tier cache-mode test-bigdata-cache-tier writeback
    set cache-mode for pool 'test-bigdata-cache-tier' to writeback

    # ceph osd tier set-overlay test-bigdata test-bigdata-cache-tier
    overlay for 'test-bigdata' is now (or already was) 'test-bigdata-cache-tier'

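In fact, a cache tier is not limited to erasure-coded pools; a replicated pool can have one too. For example, given a batch of SSDs, we can create a pool on the SSDs to act as the cache tier for SAS disks and improve performance. By combining erasure coding, replication, SAS, and SSD, we can build storage with different performance characteristics for different scenarios.

- ceph will then warn: 1 cache pools are missing hit_sets, so hit_set_count and hit_set_type must also be set: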

    # ceph osd pool set test-bigdata-cache-tier hit_set_count 1
    set pool 29 hit_set_count to 1

    # ceph osd pool set test-bigdata-cache-tier hit_set_type bloom
    set pool 29 hit_set_type to bloom

- Reference: http://docs.ceph.com/docs/master/rados/operations/erasure-code/
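The cache-tier steps above (create the cache pool, attach it, set writeback mode, set the overlay, configure hit sets) can be generated for any pair of pools. This is only a sketch: the cache_tier_cmds function is a hypothetical helper, and the PG count of 128 and the hit-set values are simply copied from the example above rather than tuned. It prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch only: cache_tier_cmds is a hypothetical helper, not a ceph command.
# It prints (does not run) the cache-tier setup for a base pool and a cache pool.
cache_tier_cmds() {
    base=$1
    cache=$2
    cat <<EOF
ceph osd pool create $cache 128
ceph osd tier add $base $cache
ceph osd tier cache-mode $cache writeback
ceph osd tier set-overlay $base $cache
ceph osd pool set $cache hit_set_count 1
ceph osd pool set $cache hit_set_type bloom
EOF
}

cache_tier_cmds test-bigdata test-bigdata-cache-tier
```

After reviewing the printed list, it could be piped to `sh -e` so that execution stops at the first failing command.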

Adding a rule by editing the CRUSH map

References:

- http://docs.ceph.com/docs/master/rados/operations/crush-map/#creating-a-rule-for-an-erasure-coded-pool

- http://blog.csdn.net/yangyimincn/article/details/50810229

- http://www.cnblogs.com/chris-cp/p/4791217.html

- How erasure coding works:

- http://www.360doc.com/content/17/0814/11/46248428_679071890.shtml

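Problems encountered in practice:

- While 12 SAS disks were evicting at 60 MB/s, the disks became very slow: each disk reached about 100 tps and about 20 MB/s of reads and writes. The annoying part is that I had essentially no way to control the evict rate, so I could only wait quietly for the eviction to finish.

- Promotion was going on at the same time as eviction. The promote rate is at least controllable, but osd_tier_promote_max_bytes_sec defaults to 5242880 bytes (5 MiB/s, which is not very large). Question: the pool was no longer receiving writes, so why was it still evicting and promoting?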

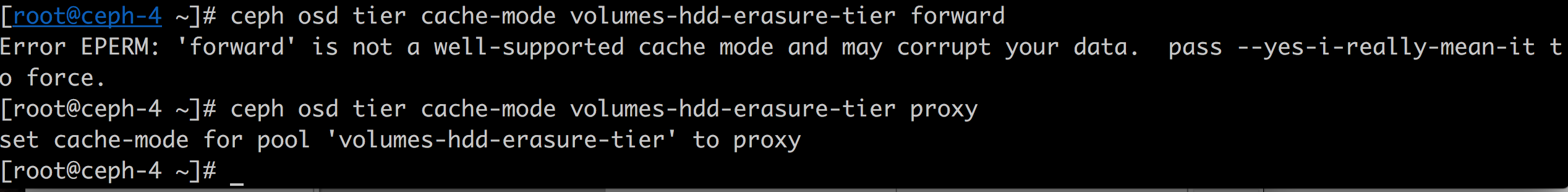

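- Try changing the cache-mode: in principle, once the cache-mode is changed to proxy, evict and promote should no longer occur.

Sure enough, right after the change, evict and promote disappeared from the ceph -s output 🙂

- Check the cache-mode: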

    ceph osd pool ls detail | grep cache_mode
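When there are several pools, the grep output can be hard to scan. The sketch below reduces it to one "pool name, cache mode" pair per line. The pool_cache_modes function is a hypothetical helper, and the sample input line is an assumed, abbreviated shape of the `ceph osd pool ls detail` output, not text captured from a real cluster.

```shell
#!/bin/sh
# Sketch only: pool_cache_modes is a hypothetical helper.
# Reads `ceph osd pool ls detail` text on stdin and prints
# "<pool name> <cache mode>" for each pool line that carries a cache_mode field.
pool_cache_modes() {
    awk '$1 == "pool" {
        for (i = 1; i < NF; i++)
            if ($i == "cache_mode") {
                gsub("\047", "", $3)   # strip the single quotes around the pool name
                print $3, $(i + 1)
            }
    }'
}

# Illustrative input line (shape assumed and abbreviated):
sample="pool 29 'test-bigdata-cache-tier' replicated size 3 tier_of 24 cache_mode writeback"
printf '%s\n' "$sample" | pool_cache_modes
```

On a live cluster the intended usage would be `ceph osd pool ls detail | pool_cache_modes`. Pool names containing spaces are not handled.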